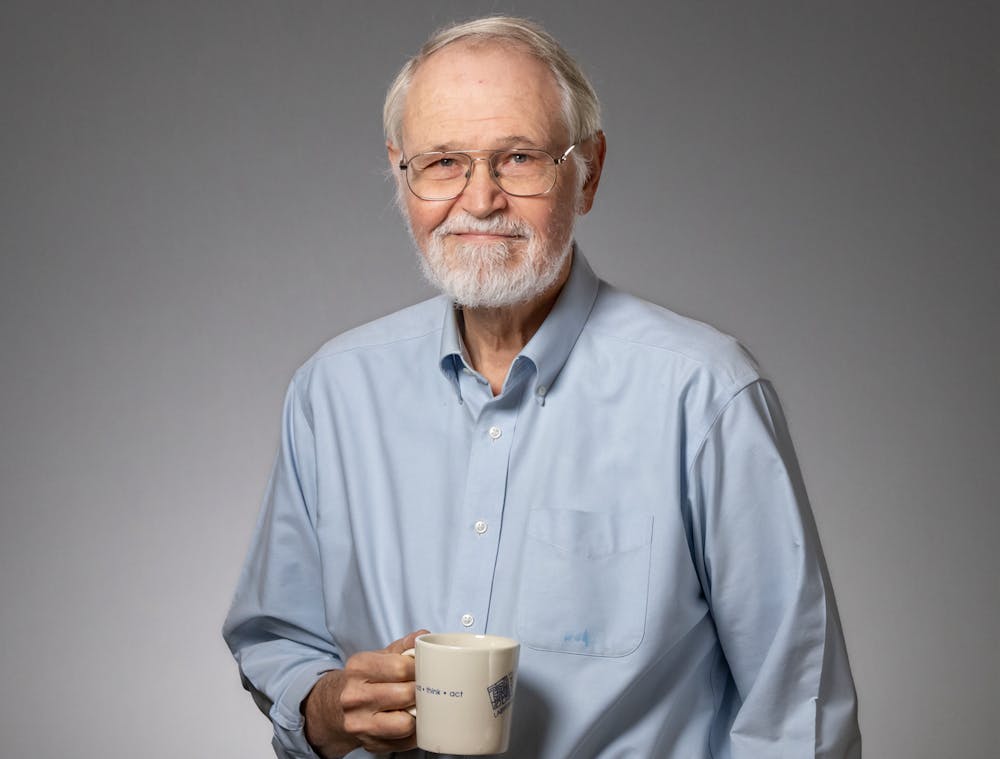

After completing his graduate studies in Princeton's Department of Electrical Engineering in 1969, Brian Kernighan spent 30 years at Bell Labs, a renowned computer science research center, before returning to the University in 2000 as a professor in the Department of Computer Science. Since then, he has taught students across disciplines and rethought how introductory computer science is taught in the age of AI.

The Daily Princetonian spoke with Professor Kernighan to learn more about his journey to Princeton, his approach to computer science education, and his perspective on how AI might shape the future of student learning.

This interview has been edited lightly for clarity and concision.

Daily Princetonian (DP): What initially drew you to the field of computer science?

Brian Kernighan (BK): That was a long time ago. I was in college when I saw a computer for the first time, but I found it kind of interesting, not knowing much about it. It was a device that seemed to flash lights, but people thought it was interesting, and somehow or other I blundered into learning a bit of programming.

DP: What led you to start teaching at Princeton, and why did you decide to stay?

BK: I came here as a graduate student and spent four and a half years basically across the road in [the] E-Quad. When I graduated, I went to Bell Labs, which is up in North Jersey. I spent 30 years there, and then I came back to Princeton [for] computer science. So, it’s not a typical path.

I had a great time at Bell Labs. For probably 25 to 30 years, it was really interesting, and I had a lot of fun. But then it was starting to get a bit tiring. The company changed. The world changed around it, and I had a chance to do some teaching. I taught CS50 at Harvard, which is an enormous class. I had also taught at Princeton as an adjunct professor — coming in and doing one course per semester — and I spent a year as a visitor, and that was a lot of fun. So, when [Princeton] made me an offer, I took it. [Returning to Princeton] was like a second childhood, or something like that.

It’s a lot of fun. You get to meet a lot of interesting students, you get to hang out with really interesting colleagues in computer science and people in other parts of the University. Continuously learning new things helps keep you, well, maybe not young, but maybe not aging as fast.

It’s partially the teaching part I enjoy, but I also do a lot of independent work. Advising, in general, is fun. I just had a meeting at Forbes, because I’ve been doing academic advising at Forbes for a long time, gearing up for yet another group of freshmen who show up in the fall.

DP: Why did you decide to leave Bell Labs?

BK: When I first went there, the world was very young. These were very early days of computing, so that meant there were all kinds of interesting things to work on. As the field matured, to some extent, that became less true. The other thing is that when I went there originally, Bell Labs was really pure research. You could pick something that was interesting, you could work on it, and nobody said, “Well, what have you done for us this quarter?” or that kind of very short-term thing. That was changing, and I started to get a little restless.

The company itself also had major changes in the way that it did business. It split itself into multiple pieces, and the financial mechanisms started to change. You had to find your own research money. These things didn’t affect me directly, but they were in the air, and I thought maybe it was time to think about something different.

Additionally, I enjoyed the teaching that I did, in particular in the big class at Harvard. I also learned that I could handle the mechanics of a big class, which is something I didn't know until I tried, so I didn't have to worry about that. This was my "baptism of fire," as the cliché goes.

DP: How have your experiences here influenced your own work and/or approach to computer science education?

BK: One course which I've taught a few times is COS 109: Computers in Our World. And the people in that are definitely not technical. They're people who, let's be honest, are taking it to check off the QCR requirement. But trying to explain what computing and communication technology is, how they work, how they change the world around us, and how you should react to them, to people whose interests and expertise are just somewhere else, is interesting and fun. It's fairly rare that somebody takes this course and goes on in computer science, but, boy, they're good at stuff. So it's fun to try and explain [computer science] to them, and then to learn what they like to do in their field and watch them do that well.

DP: Throughout your career, you’ve emphasized the importance of interdisciplinary studies and making computer science accessible to those from non-technical backgrounds. What inspired this approach, and how have you seen it impact students from diverse fields?

BK: COS 109 introduced me to lots of people — not just students, but also to faculty members who are in other fields as well. Over the last 10 or 20 years, computing has become an important part of [other] academic discipline[s]. I have gravitated toward people who are doing that sort of thing. I taught a course with Effie Rentzou in the French and Italian Department, and one with Meredith Martin in the English Department on data in the humanities. And I’ve been involved with the Center for Digital Humanities basically since it began. That’s a way of looking at information that comes from other fields.

I also teach independent work seminars here. The students in them are almost entirely JP juniors in computer science, and the topic is digital humanities, and that’s their independent work. They’re looking at all kinds of weird and interesting data that comes mostly from the humanities. In other words, it’s not financial data. It’s not Wall Street, ORFE kind of data, nor is it the kind of data that you would get from physics experiments. It’s more weird things about what people do, properties of books, history, people’s social interactions, and more. The students in those classes do data analysis. They explore the data, they look for patterns, and they try to learn something interesting. They are technical, but they have interests in other things as well.

DP: With AI and machine learning playing an increasingly prominent role in the modern day, what do you see as the biggest challenges and opportunities for the next generation of programmers?

BK: I wish I knew what was going to happen there. If you think about it, the large language models like ChatGPT have been around for less than two and a half years. They have changed the world — particularly the academic world. They're a lot more sophisticated today than they were when they first came out, and they already were pretty remarkable then. I think it's one of those questions nobody has a good answer to. We discussed it when I was over at Forbes today. How should classes deal with the use of large language models? Should you be allowed to use one to write a paper? And [when you] take that sort of question and apply it to all the different courses that you might take, the answers, I think, are not the same.

In COS 126, for example, you’re not supposed to use any of these kinds of things to solve the programming assignments. I think that’s fair. But if you get out in the real world, people use those things routinely because they give you some advantage, a lever that lets you deal with bigger problems. How do you balance that? The short answer is, I don’t know.

In my class last fall, I told students that they could use generative AI to get a better understanding of what was going on. They could not use it on problem sets. It was crystal clear that people used it on problem sets anyway because they would turn in assignments that would have been too sophisticated for a 126 student. We then had a discussion in class about the trade-offs — you got the right answer to the problem, but you didn't understand what you were doing.

But that’s one answer for one part of one class. There are just so many variations. The guidance that comes down from on high is basically, every instructor has to figure it out for himself or herself. The other piece of guidance is to make it crystal clear to the students what the rules are. But the rules are different in every single class, and that’s hard.

DP: If you could give one piece of advice to students and aspiring programmers today, what would it be?

BK: Learn how to do it yourself. Use the mechanical aids, but learn what you’re doing. A general problem with these large language models is that they will give you confident answers that are total nonsense, and if you don’t understand what you’re doing well enough, you will not recognize what the nonsense is.

The best that I could probably do is to make students aware of AI so that they can use it effectively when it makes sense and be wary when it doesn't. In COS 109, I used to have programming assignments. You can't do that anymore because students can just look them up. I changed some of the lab assignments to a form that can be done by hand. For lab two, I told students to get an AI model of some sort to do it for them. Then, I asked them to look and see what happened, what worked, what didn't work, and what they learned from that experience. Somebody who pays attention to the assignment, as opposed to just checking the work off, might learn something from that experience. But I think this is going to be a moving target, so I try to keep ahead so that people are encouraged or forced to learn as well.

Learn where AI is useful and when you shouldn’t be using it, and learn enough so that you can detect when it’s given the wrong answers, when it’s hallucinating or lying.

Angela Li is an assistant Features editor for the ‘Prince.’

Please send any corrections to corrections[at]dailyprincetonian.com.