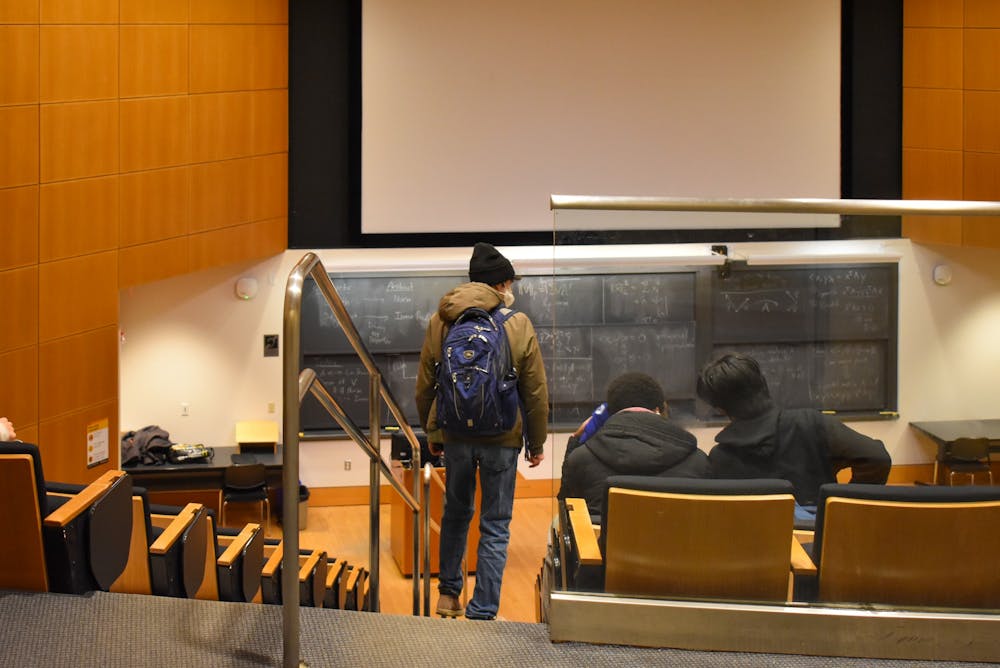

Recent coverage of ChatGPT, a large language model developed by OpenAI that uses machine learning (ML) to generate responses to text prompts, has mostly fallen into one of two camps: those who proclaim the death of the college essay and those who hail a new era of streamlined education in which students are freed from slogging through first drafts. My perspective is more realistic and lies somewhere in between: ChatGPT can and should serve as a helpful tool, but its limitations are significant enough that it won't kill the college essay or revolutionize much of anything, at least in its current form.

Last month, Senior Columnist Mohan Setty-Charity ’24 urged the University to be cautious before rushing to ban tools like ChatGPT. He is correct, but not because artificial intelligence (AI) will replace the college essay for Princeton students. Instead, we should use the advent of this tool to ask whether our homework assignments merely require students to produce coherent text rather than develop coherent ideas.

To start, ChatGPT is not particularly effective at writing college essays. As advanced and sophisticated as language models like ChatGPT may seem, it is important to remember that they are just algorithms trained on vast amounts of data. As computer science professor Arvind Narayanan and PhD candidate Sayash Kapoor recently explained, they can easily become "bullshit generators" if we don’t use them with caution. This is because ChatGPT, like any AI model, is only as good as the data it is trained on. If the model is trained on a dataset that contains inaccuracies or biases, it will reflect those in its generated text. Moreover, ChatGPT's output is based on the probability of certain sentence constructions, not on any measure of truth. This means that any text produced by ChatGPT, while potentially helpful for finding content or ideas, needs to be thoroughly checked for accuracy and precision before being submitted as student work. In my experience, over a longer stretch of text, ChatGPT rarely says anything beyond the superficial. Even students who rely heavily on the tool for written work will still have to do a great deal of original thinking to produce any assignment worthy of merit.

Given this, if students are being assigned essays that ChatGPT can write, perhaps those were not good assignments in the first place. ChatGPT forces us to rethink the purpose and value of homework. Some would argue that ChatGPT subverts the purpose of a good education by providing students with instant, personalized assistance on a wide range of subjects and topics. Yet if Princeton homework can be completed by a machine, what is its true value?

Rather than serving merely as a way to evaluate learning, homework should be an opportunity to challenge students to build on core concepts while generating original arguments and insights. ChatGPT can help students achieve the bare minimum, but Princeton courses generally have a more ambitious aim: to teach students to think critically, solve problems, and apply what they have learned in new and creative ways. ChatGPT cannot do that well, so any student will still find themselves “filling in” the critical thinking for their essays. AI is simply a tool, and, like any tool, it needs to be used with caution and in conjunction with human intelligence. The aspects of homework that are actually valuable won’t be lost if students draft individual parts of essays with ChatGPT and then edit them. In fact, this is a valid and time-efficient way of completing written work that avoids the minutiae of drafting while preserving the voice and original thinking of the writer.

The sure sign of pure ChatGPT output, one that will show through in any written work, is a lack of critical thought. A ban is not necessary for students to face the consequences of an unedited draft. On the other hand, if a ban on ChatGPT were put in place, teachers might rely on tools like GPTZero, an AI detection algorithm made by Edward Tian ’23. While GPTZero is an impressive tool, its accuracy has not yet been tested in a real-world context. Teachers don’t need to rely on suspicions raised by esoteric measures such as “perplexity” and “burstiness,” which GPTZero uses; the clear lack of coherence or accuracy in ChatGPT output is a much easier indicator of a failure to demonstrate critical thought. These differences become more obvious as a reader grows more familiar with AI-generated text. I anticipate that faculty will quickly learn to spot the aforementioned ‘generated bullshit’ and assign a commensurate grade without bringing honor into question. In contrast, if a ChatGPT ban made faculty reliant on an AI detection tool of contested accuracy, the resulting false positives and false negatives could confound a grading process that should be focused on a broader sense of understanding.

It is clear that banning ChatGPT at Princeton University would be not only unnecessary but silly. If anyone were foolish enough to turn in a raw ChatGPT-produced essay, it would be easily detectable with AI detection tools such as GPTZero, not to mention that any grader could easily spot the inaccuracies in the text. The University should recognize that the limitations of this product allow for a better learning environment, one that is by no means substantively threatened by plagiarism or dishonesty.

By the way, more than 80% of this column was drafted by ChatGPT. Editing and augmenting it took me slightly less time than writing an article from scratch would have, but much of the work of developing ideas remained the same as for an original piece. If you run it through GPTZero, it raises some flags, but this column has been so heavily edited that it appears to be human text. Most importantly, my main thesis statement was 100% original, as it should be in any ChatGPT-assisted essay worth turning in.

Christopher Lidard is a sophomore from outside Baltimore, Maryland. A computer science concentrator and tech policy enthusiast, his columns focus on technology issues on campus and at large. He can be reached at clidard@princeton.edu.